Fermi Redux – Where is All the AI-Enabled Cybercrime? - 3 Quarks Daily

by Malcolm Murray

Enrico Fermi famously asked – allegedly out loud, over lunch in the cafeteria – “Where is everybody?”, as he pondered the disconnect between the large number of potentially habitable planets in the universe and the number of alien civilizations we had actually observed.

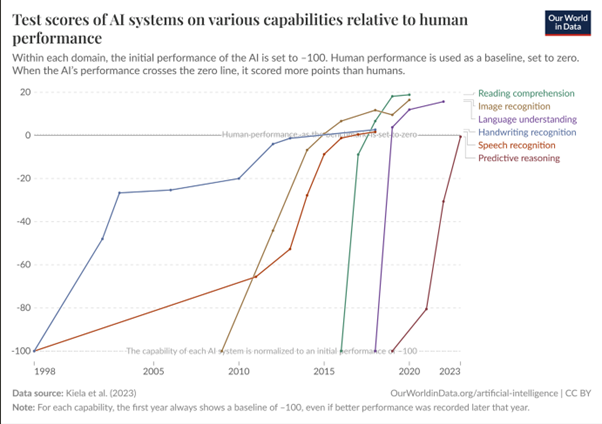

Today, we could in a similar vein ask ourselves: “Where is all the AI-enabled cybercrime?” We have now had three years of AI models scoring better than the average human on coding tasks, and four years of AI models that can draft more convincing emails than humans. We have had years of “number go up”-style charts, like figure 1 below, showing incessant growth in AI capabilities that would seem relevant to cybercriminals. Last year, I ran a Delphi study with cyber experts in which they forecast large increases in cybercrime by now. So we could have expected to be seeing cybercrime running rampant by now, meaningfully damaging the economy and societal structures. Everybody should already need three-factor authentication.

Figure 1 – LLM Capabilities (Our World in Data)

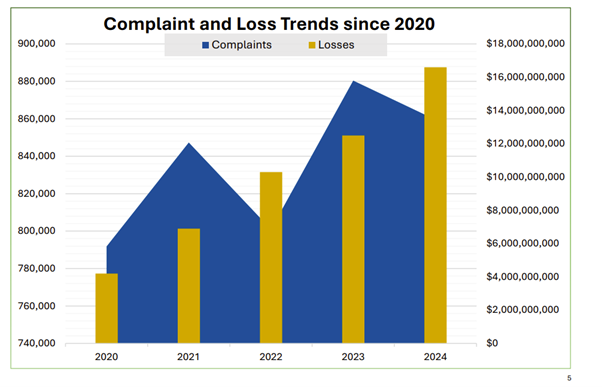

But we are not. The average password is still 123456. The reality looks more like figure 2. Cyberattacks and losses are increasing, but there is no AI-enabled exponential hump.

Figure 2 – Cost of Cybercrime (FBI’s Internet Crime Complaint Center)

So we should ask ourselves why this is. The question is interesting both in its own right, since cyberattacks have the potential to cripple our digital society, and as a source of clues to how advanced AI will affect the economy and society more broadly. The latter seems much needed at the moment, as there is significant fumbling in the dark. Just in the past month, two consecutive episodes of the Dwarkesh Podcast featured two quite different predictions of the future. First, Daniel Kokotajlo and Scott Alexander outlined AI 2027, a scenario in which AGI arrives in 2027, accompanied by robot factories and world takeover. Then Ege Erdil and Tamay Besiroglu described their vision, in which we will not have AGI for at least another 30 years. It is striking how forecasters working with the same components and factors that determine AI progress can reach very different conclusions simply by weighting those factors differently. It is as if two chefs both made pesto with basil, olive oil, garlic, pine nuts and cheese, but in different proportions: both end up with “pesto,” but one has a thick herb paste and the other a puddle of green oil.

Below, I examine the potential explanations one by one and assess how plausible it is that each holds some explanatory power. Finally, I turn to whether these explanations could also be relevant to the impact of advanced AI as a whole.

Option 1: Defense

The first option is that the AI models are well-defended enough that cybercrime threat actors cannot properly use them. This could plausibly be the case if only the most advanced models were useful to cybercriminals, these models could be accessed only through APIs, and AI developers had strong monitoring and prevention controls in place on those APIs. This does not seem highly likely, however. Although the labs do a good job of monitoring API traffic and shutting down offenders, as detailed in, e.g., OpenAI and Microsoft’s threat report, new jailbreak techniques are also being discovered continuously. Just recently, we saw a new jailbreak technique that could elicit both CBRN and self-harm output from all major models. Further, open-source models have crept closer to the frontier. The cyber capabilities of Llama 4 and DeepSeek are not far behind those of the closed-source models, and, being open-source, these models have very few controls in place to prevent cybercriminals from using them.

Plausibility: Low

Option 2: The Adoption Curve

A second potential option is that we are simply seeing slow adoption and diffusion of AI technology. However, this would run counter to the recent trend of each new technology being adopted faster than the last. Personal computers needed fourteen years to reach 50 million users, Facebook ten months to reach one million users, and ChatGPT only five days to reach a million users. Most recently, ChatGPT’s Ghiblification of the internet led OpenAI to add one million users in a single hour. So adoption curves seem, if anything, to be shrinking, with less of a lag for every new product. Cybercriminals, who can safely be assumed to be among the most “extremely online” parts of society, are unlikely to be slow adopters waiting for an Apple-like market innovation that simplifies the product for the laggards. So this does not seem very plausible either.

Plausibility: Low

Option 3: 4D Chess

A third, 4D-chess-style option is that cybercriminals are already able to increase the quality and quantity of their attacks but are cautiously holding back. According to this theory, they are keeping their cards close to their chest so as not to reveal them and trigger a regulatory crackdown, while awaiting the perfect opportunity to strike. However, this would seem to require highly coordinated action among a group of actors that are unlikely to show collaborative tendencies.

Plausibility: Very low

Option 4: Real-World Messiness and Bottlenecks

A fourth option is that the benchmarks and eval results are misleading, as they test AI systems in clean environments where tools are handed to the AI as needed, rather than in the messy real-world codebases an AI would have to work with in practice. When I referred to frontier AI models as having “super-human coding capabilities”, a coder friend laughed and said he had tried extensively to use Claude and found that cleaning up its errors took him just as much time as doing the work himself. If the human work does not actually shrink but is merely pushed downstream, AI simply replaces the old bottlenecks with new ones.

Plausibility: Medium-high

Option 5: Economics

A fifth option is that the economics are just not in place yet. We know that AI systems are highly competent coders, but we have no visibility into how cost-efficient they are relative to existing, human-driven cyberattacks. Cybercrime is already a highly efficient operation, with cybercrime syndicates operating like legitimate companies, complete with hierarchical structures and optimized supply chains. It is also highly lucrative, with crime groups selling, e.g., ransomware-as-a-service at high margins. It might well be that the cost of disrupting this existing state of affairs is higher than the perceived benefits of AI-enabled operations.

Plausibility: High

Option 6: Jagged Edge

A final option is the jagged-frontier nature of today’s AI systems: AI still offers only an incomplete solution. The parts of a cyberattack can be captured in the sequential steps of Lockheed Martin’s Cyber Kill Chain, which shows how an attack goes from Reconnaissance to Weaponization and on to later steps like Command and Control before reaching Actions on Objectives. Each of these steps is conditional on the earlier ones succeeding. An attacker cannot achieve lateral movement if they have not established a foothold in the first place. Currently, we have LLM benchmarks demonstrating high AI performance on the early steps, such as reconnaissance and weaponization (e.g., writing a convincing spear-phishing email or writing malware), but we know very little about how much AI can help with the later steps. Just as it took decades for factories to redesign their workflows to capture the benefits of electricity, cybercriminals may take a long time to retool their processes to fit in the AI puzzle piece.

Plausibility: High

A combination of the last three options seems likely to explain a large part of this disconnect. The super-human performance of AI models on benchmarks does not translate one-to-one into real-world usefulness, especially as the models help with only parts of the kill chain, and new bottlenecks replace the old ones. Given that the non-LLM economics are already highly attractive, the cost/benefit case for retooling workflows to accommodate AI is not there yet.

This likely provides useful lessons as we weigh the AI 2027 scenario against scenarios with later AGI. For AI to be adopted at scale and truly transform science or the economy, it might need to offer an end-to-end solution, avoid creating new bottlenecks, and visibly improve on the economics of existing workflows. This might speak against the AI 2027 scenario, in which AI R&D is assumed to be able to tackle the entire research and development workflow as well as production.
