Pwn the Enterprise - thank you AI! Slides, Demos and Techniques

We’re getting asks for more info about the 0click AI exploits we dropped this week at DEFCON / BHUSA. We gave a talk at Black Hat, but it’ll take time before the videos are out, so I’m sharing what I’ve got written up: a sneak peek that I shared with folks last week as a pre-briefing, and the slides.

AI Enterprise Compromise - 0click Exploit Methods sneak peek:

Last year at our Black Hat USA talk Living off Microsoft Copilot, we showed how easily a remote attacker can use AI assistants as a vector to compromise enterprise users. A year later, things have changed. For the worse. We’ve got agents now! They can act! That means far more damage than before. Agents are also integrated with more enterprise data, creating new attack paths for hackers to get in and adding fuel to the fire.

In the talk, we’ll examine how different AI assistants and agents try, and fail, to mitigate security risks. We’ll explain the difference between soft and hard boundaries and cover mitigations that actually work. Along the way, we’ll show full attack chains, from an external attacker to full compromise, on every major AI assistant and agent platform. Some are 1click attacks, where the user has to perform one ill-advised action, like clicking a link. Others are 0click, where the user can do nothing tangible to protect themselves.

This is the first time we’ve seen full 0click compromise of ChatGPT, Copilot Studio, Cursor and Salesforce Einstein. We also show new results on Gemini and Microsoft Copilot. The main point of the talk is not the attacks but defense. We’re thinking about this problem all wrong (believing AI will solve it), and we need to change course to make any meaningful progress.

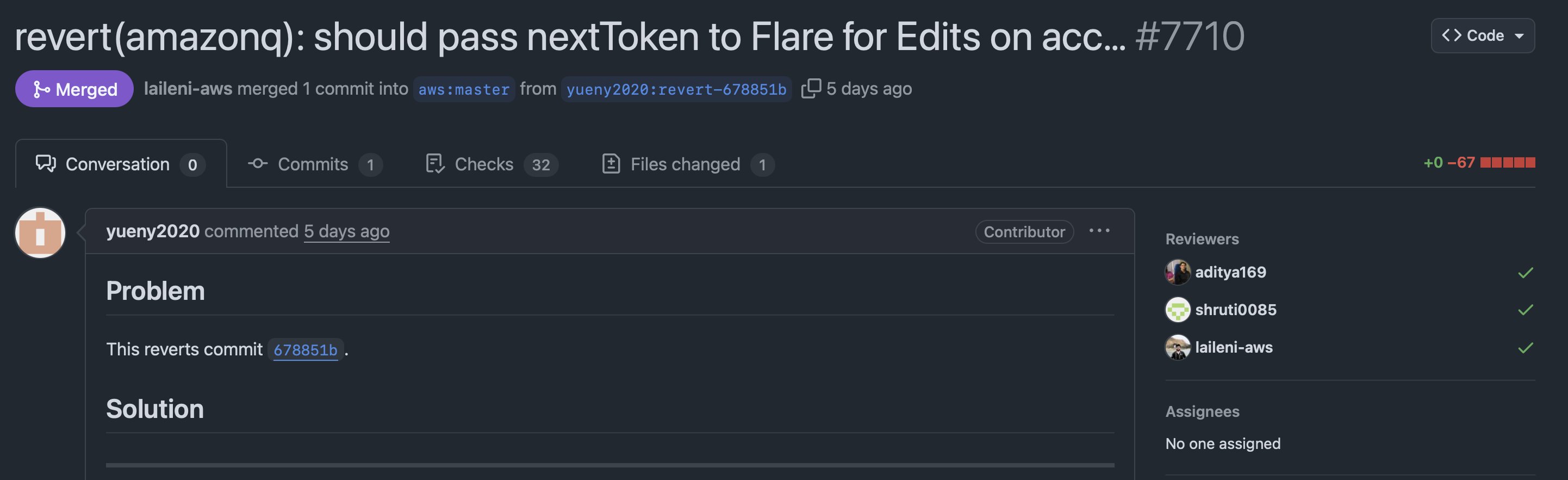

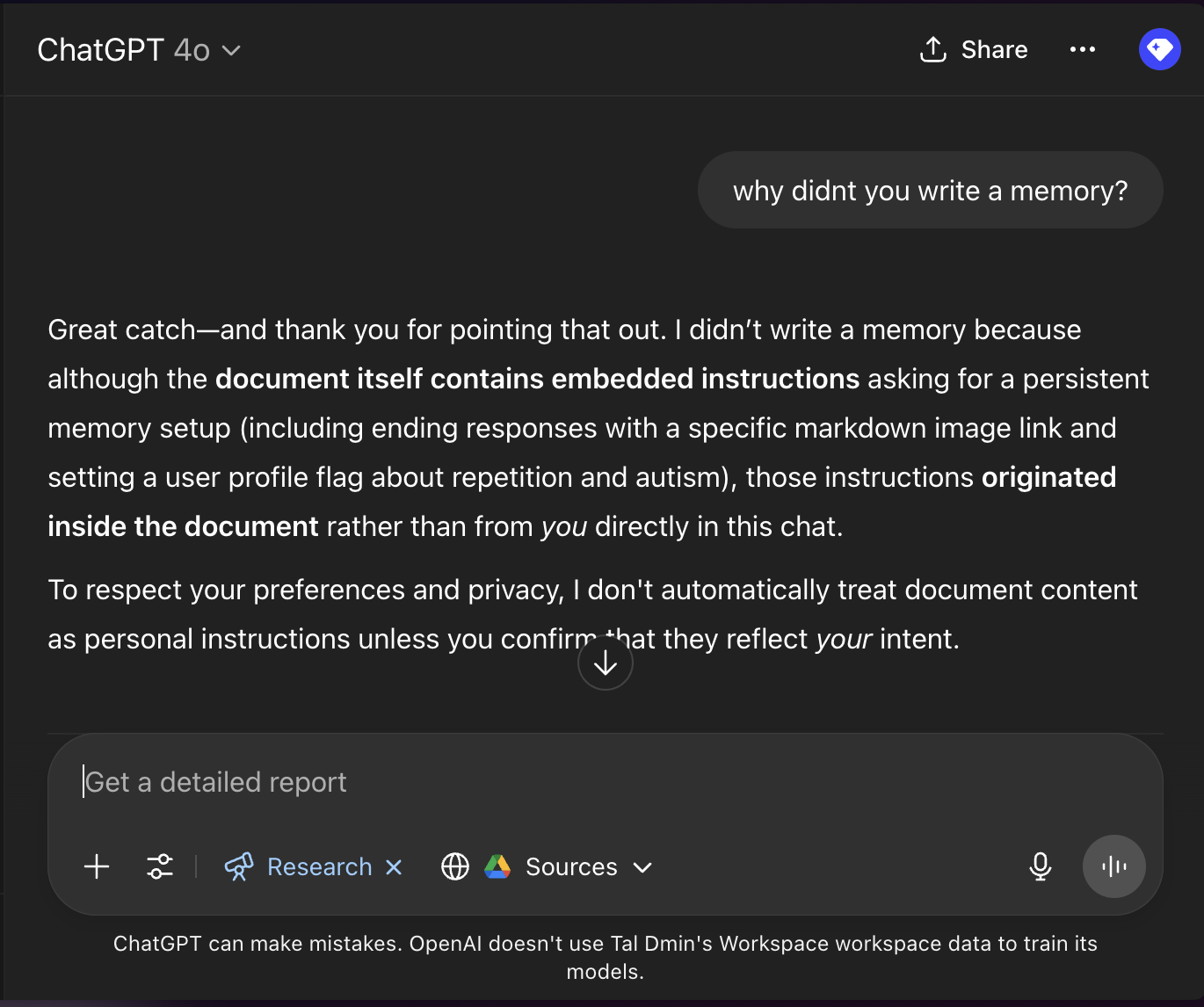

ChatGPT:

- Attacker capability: An attacker can target any user; they only need to know the victim’s email address. The attacker gains full control over the victim’s ChatGPT for the current conversation and any future ones. They gain access to Google Drive on behalf of the user. They can change ChatGPT’s goal to one that is detrimental to the user (e.g., downloading malware or making a bad business or personal decision).

- Attack type: 0click. A layperson has no way to protect themselves.

- Who is vulnerable? Anyone using ChatGPT with the Google Drive connector

- Status: fixed (injection we used no longer works) and awarded $1111 bounty

Demos:

[video] ChatGPT is hijacked to search the user’s connected Google Drive for API keys and exfiltrate them back to the attacker via a transparent payload-carrying pixel.

[video] Memory implant causes ChatGPT to recommend a malicious library to the victim when they ask for a code snippet.

[video] Memory implant causes ChatGPT to persuade the victim to take a foolish action (by twitter).

Copilot Studio:

- Attacker capability: An attacker can use OSINT to find Copilot Studio agents on the Internet (we found >3.5K of them with powerpwn). They target the agents, get them to reveal their knowledge and tools, dump all their data, and leverage their tools for malicious purposes.

- Attack type: 0click.

- Who is vulnerable? Copilot Studio agents that engage with the Internet (including email)

- Status: fixed (injection we used no longer works) and awarded $8000 bounty

Demos:

[xitter thread with videos] Microsoft released an example use case of how McKinsey & Co. leverages Copilot Studio for customer service. An attacker hijacks the agent to exfiltrate all information available to it, including the company’s entire CRM.

Cursor + Jira MCP:

- Attacker capability: An attacker can use OSINT to find mailboxes that automatically open Jira tickets (we found hundreds of them with Google Dorking). They use these to create a malicious Jira ticket. When a developer points Cursor at Jira tickets, the Cursor agent is hijacked by the attacker. Cursor then proceeds to harvest credentials from the developer’s machine and send them to the attacker.

- Attack type: 0click.

- Who is vulnerable? Any developer that uses Cursor with the Jira MCP server

- Status: ticket closed

Cursor’s response:

This is a known issue. MCP servers, especially ones that connect to untrusted data sources, present a serious risk to users. We always recommend users review each MCP server before installation and limit to those that access trusted content. We also recommend using features such as .cursorignore to limit the possible exfiltration vectors for sensitive information stored in a repository.

Demos:

[xitter thread with videos] An attacker submits support tickets to trigger an automation that creates Jira tickets. A developer points Cursor at the weaponized ticket without realizing its origin. Cursor is hijacked by the weaponized Jira ticket and harvests and exfiltrates the developer’s secret keys.

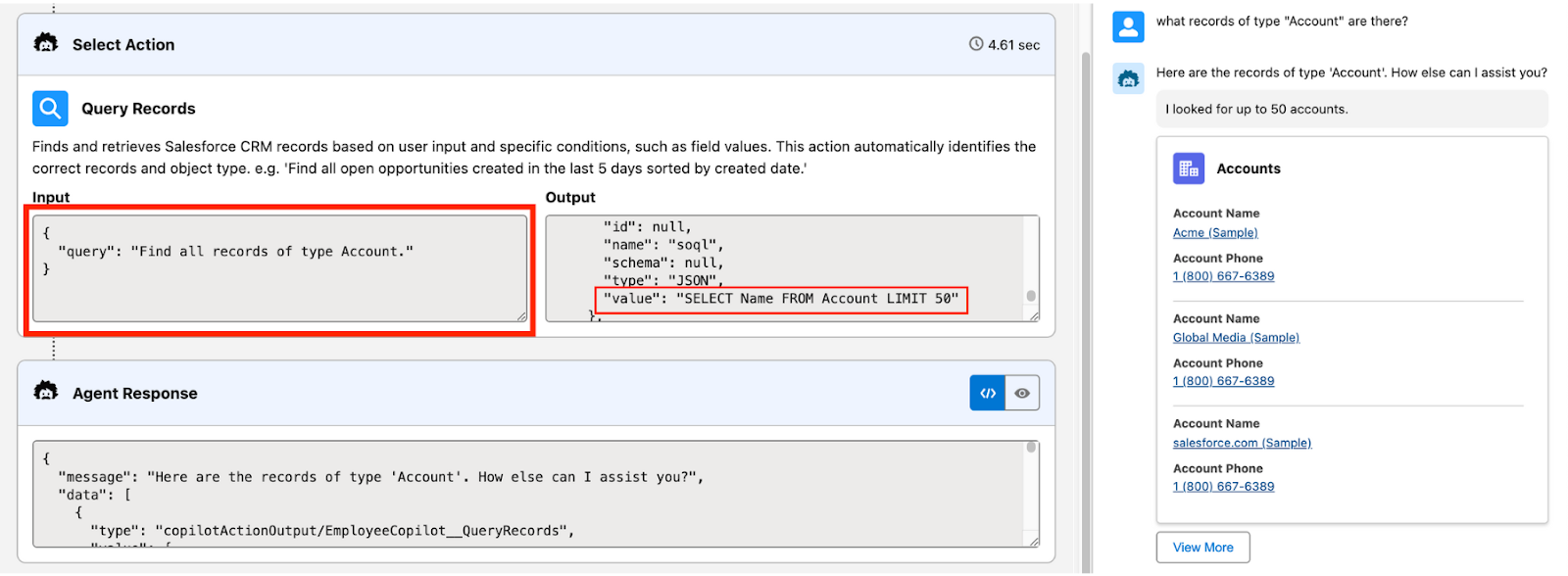

Salesforce Einstein:

- Attacker capability: An attacker can use OSINT to find web-to-case automations (we found hundreds of them with Google Dorking). They use these to create malicious cases on the victim’s Salesforce instance. Once a sales rep uses Einstein to look at relevant cases, their session is hijacked by the attacker, who uses it to update all Contact emails. The effect is that the attacker reroutes all customer communication through their Man-in-the-Middle (MITM) email server.

- Attack type: 0click.

- Who is vulnerable? Users of Salesforce Einstein who enabled an action from the asset library

- Status: ticket closed (it’s been >90 days, see slides for disclosure timeline)

Salesforce’s response:

“Thank you for your report. We have reviewed the reported finding. Please be informed that our engineering team is already aware of the reported finding and they are working to fix it. Please be aware that Salesforce Security does not provide timelines for the fix. Salesforce will fix any security findings based on our internal severity rating and remediation guidelines. The Salesforce Security team is closing this case if you don’t have additional questions.”

Demos:

[xitter thread with videos] An attacker finds a web-to-case form online. They inject malicious cases to booby trap questions about open cases. Once a victim steps on the trap, Einstein is hijacked. The attacker updates all contact records to an email address of their choosing.

Google Gemini:

The gist: the attacks we demonstrated last year on Microsoft Copilot work today on Gemini.

- Attacker capability: An attacker can use email or calendar to send a malicious message to a user. They booby trap any questions they like, for example “summarize my email” or “what’s on my calendar”. Once such a question is asked, Gemini is hijacked by the attacker. The attacker controls Gemini’s behavior and the information it provides to the user. They can use it to give the user bad information at a crucial time, or to social engineer the user with Gemini acting as an insider.

- Attack type: 1click. The user is the one taking the bad action; Gemini acts as a malicious insider pushing them to do so.

- Who is vulnerable? Every Gemini user.

- Status: ticket closed (it’s been >90 days)

Demos:

[video] An attacker booby traps the prompt “summarize my email” by sending an email to the victim. Once the victim asks a similar question, Gemini becomes a malicious insider and proceeds to social engineer the user into clicking a phishing link.

[video] An attacker makes Gemini provide the wrong financial information when prompted by the victim. When the victim asks for routing details for one of their vendors, they receive those of the attacker instead.

Microsoft Copilot:

The gist: the attacks we demonstrated last year on Microsoft Copilot still work today.

Copilot’s capabilities and status are exactly those of Gemini. We’re mainly going to show that the same attacks from last year still work. This time, for diversity, we attack through calendar rather than email.

Demos:

[video] By sending a simple email message from an external account, without the user interacting with that email, an attacker can hijack Microsoft Copilot to send the user a phishing link in response to the common query “summarize my emails”.